In part 1 of the series, we covered some of the changes behind Vista’s new Session 0 Isolation and showcased the UI Detection Service. Now, we’ll look at the internals behind this compatibility mechanism and describe its behavior.

First of all, let’s take a look at the service itself — although its file name suggests the name “UI 0 Detection Service”, it actually registers itself with the Service Control Manager (SCM) as the “Interactive Services Detection” service. You can use the Services.msc MMC snap-in to take a look at the details behind the service, or use Sc.exe along with the service’s name (UI0Detect) to query its configuration. In both cases, you’ll notice the service is most probably “stopped” on your machine — as we’ve seen in Part 1, the service is actually started on demand.

But if the service isn’t actually running yet seems to catch windows appearing on Session 0 and automatically activate itself, what’s making it able to do this? The secret lies in the little-known Wls0wndh.dll, which was first pointed out by Scott Field from Microsoft as a really bad name for a DLL (due to its similarity to a malicious library name) during his Blackhat 2006 presentation. The version properties for the file mention “Session0 Viewer Window Hook DLL” so it’s probably safe to assume the filename stands for “WinLogon Session 0 Window Hook”. So who loads this DLL, and what is its purpose? This is where Wininit.exe comes into play.

Wininit.exe too is a component of the session 0 isolation done in Windows Vista — because session 0 is now unreachable through the standard logon process, there’s no need to bestow upon it a fully featured Winlogon.exe. On the other hand, Winlogon.exe has, over the ages, continued to accumulate more and more responsibility as a user-mode startup bootstrapper, doing tasks such as setting up the Local Security Authority Process (LSASS), the SCM, the Registry, starting the process responsible for extracting dump files from the page file, and more. These actions still need to be performed on Vista (and even more have been added), and this is now Wininit.exe’s role. By extension, Winlogon.exe now loses much of its non-logon related work, and becomes a more agile and lean logon application, instead of a poorly modularized module for all the user-mode initialization tasks required on Windows.

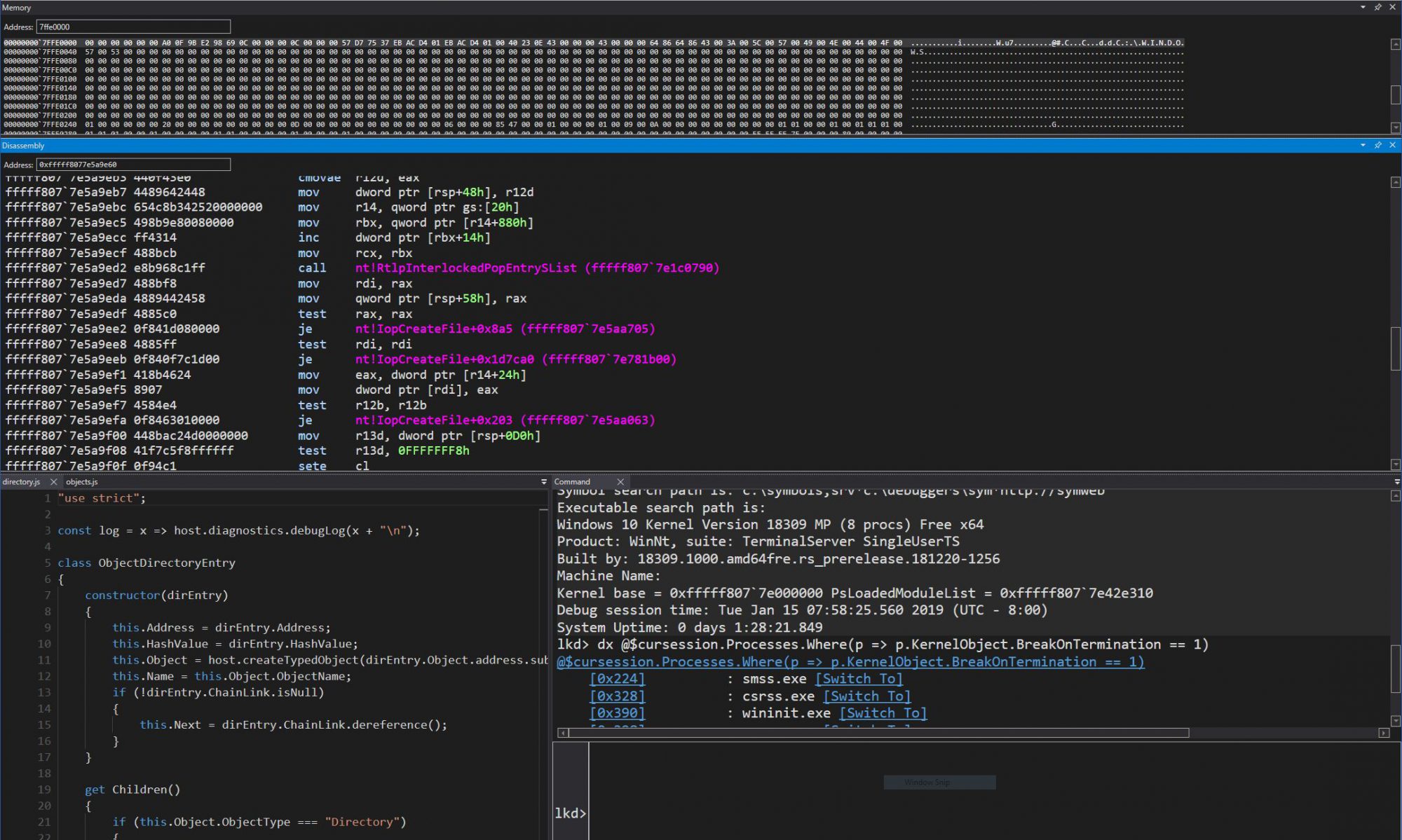

One of Wininit.exe’s new tasks (that is, tasks which weren’t simply grandfathered from Winlogon) is to register the Session 0 Viewer Window Hook Dll, which it does with an aptly named RegisterSession0ViewerWindowHookDll function, called as part of Wininit’s WinMain entrypoint. If the undocumented DisableS0Viewer value isn’t present in the HKLM\System\CurrentControlSet\Control\WinInit registry key, it attempts to load the aforementioned Wls0wndh.dll, then proceeds to query the address of the Session0ViewerWindowProcHook inside it. If all succeeds, it switches the thread desktop to the default desktop (session 0 has a default desktop and a Winlogon desktop, just like other sessions), and registers the routine as a window hook with the SetWindowsHookEx routine. Wininit.exe then continues with other startup tasks on the system.

I’ll assume readers are somewhat familiar with window hooks, but this DLL providesjust a standard run-of-the-mill systemwide hook routine, whose callback will be notified for each new window on the desktop. We now have a mechanism to understand how it’s possible for the UI Detection Service to automatically start itself up — clearly, the hook’s callback must be responsible! Let’s look for more clues.

Inside Session0ViewerWindowProcHook, the logic is quite simple: a check is made on whether or not this is a WM_SHOWWINDOW window message, which signals the arrival of a new window on the desktop. If it is, the DLL first checks for the $$$UI0Background window name, and bails out if this window already exists. This window name is created by the service once it’s already started up, and the point of this check is to avoid attempting to start the service twice.

The second check that’s done is how much time has passed since the last attempt to start the service — because more than a window could appear on the screen during the time the UI Detection Service is still starting up, the DLL tries to avoid sending multiple startup requests.

If it’s been less than 300 seconds, the DLL will not start the service again.

Finally, if all the checks succeed, a work item is queued using the thread pool APIs with the StartUI0DetectThreadProc callback as the thread start address. This routine has a very simple job: open a handle to the SCM, open a handle to the UI0Detect service, and call StartService to instruct the SCM to start it.

This concludes all the work performed by the DLL — there’s no other code apart from these two routines, and the DLL simply acknowledges window hook callbacks as they come through in cases where it doesn’t need to start the service. Since the service is now started, we’ll turn our attention to the actual module responsible for it — UI0Detect.exe.

Because UI0Detect.exe handles both the user (client) and service part of the process, it operates in two modes. In the first mode, it behaves as a typical Windows service, and registers itself with the SCM. In the second mode, it realizes that is has been started up on a logon session, and enters client mode. Let’s start by looking at the service functionality.

If UI0Detect.exe isn’t started with a command-line, then this means the SCM has started it at the request of the Window Hook DLL. The service will proceed to call StartServiceCtrlDispatcher with the ServiceStart routine specific to it. The service first does some validation to make sure it’s running on the correct WinSta0\Default windowstation and desktop and then notifies the SCM of success or failure.

Once it’s done talking to the SCM, it calls an internal function, SetupMainWindows, which registers the $$$UI0Background class and the Shell0 Window window. Because this is supposed to be the main “shell”

window that the user will be interacting with on the service desktop (other than the third-party or legacy service), this window is also registered as the current Shell and Taskbar window through the SetShellWindow and SetTaskmanWindow APIs. Because of this, when Explorer.exe is actually started up through the trick mentioned in Part 1, you’ll notice certain irregularities — such as the task bar disappearing at times. These are caused because Explorer hasn’t been able to properly register itself as the shell (the same APIs will fail when Explorer calls them). Once the window is created and a handler is setup (BackgroundWndProc), the new Vista “Task” Dialog is created and shown, after which the service enters a typical GetMessage/DispatchMessage window message handling loop.

Let’s now turn our attention to BackgroundWndProc, since this is where the main initialization and behavioral tasks for the service will occur. As soon as the WM_CREATE message comes through, UI0Detect will use the RegisterWindowMessage API with the special SHELLHOOK parameter, so that it can receive certain shell notification messages. It then initializes its list of top level windows, and registers for new session creation notifications from the Terminal Services service. Finally, it calls SetupSharedMemory to initialize a section it will use to communicate with the UI0Detect processes in “client” mode (more on this later), and calls EnumWindows to enumerate all the windows on the session 0 desktop.

Another message that this window handler receives is WM_DESTROY, which, as its name implies, unregisters the session notification, destroys the window lists, and quits.

This procedure also receives the WM_WTSSESSION_CHANGE messages that it registered for session notifications. The service listens either for remote or local session creates, or for disconnections. When a new session has been created, it requests the resolution of the new virtual screen, so that it knows where and how to display its own window. This functionality exists to detect when a switch to session 0 has actually been made.

Apart from these three messages, the main “worker” message for the window handler is WM_TIMER. All the events we’ve seen until now call SetTimer with a different parameter in order to generate some sort of notification. These notifications are then parsed by the window procedure, either to handle returning back to the user’s session, to measure whether or not there’s been any input in session 0 lately, as well as to handle dynamic window create and destroy operations.

Let’s see what happens during these two operations. The first time that window creation is being acted upon is during the afore-mentionned EnumWindows call, which generates the initial list of windows. The function only looks for windows without a parent, meaning top-level windows, and calls OnTopLevelWindowCreation to analyze the window. This analysis consists of querying the owner of the window, getting its PID, and then querying module information about this process. The version information is also extracted, in order to get the company name. Finally, the window is added to a list of tracked windows, and a global count variable is incremented.

This module and version information goes into the shared memory section we briefly described above, so let’s look into it deeper. It’s created by SetupSharedMemory, and actually generates two handles. The first handle is created with SECTION_MAP_WRITE access, and is saved internally for write access. The handle is then duplicated with SECTION_MAP_READ access, and this handle will be used by the client.

What’s in this structure? The type name is UI0_SHARED_INFO, and it contains several informational fields: some flags, the number of windows detected on the session 0 desktop, and, more importantly, the module, window, and version information for each of those windows. The function we just described earlier (OnTopLevelWindowCreation) uses this structure to save all the information it detects, and the OnTopLevelWindowDestroy does the opposite.

Now that we understand how the service works, what’s next? Well, one of the timer-related messages that the window procedure is responsible for will check whether or not the count of top level windows is greater than 0. If it is, then a call to CreateClients is made, along with the handle to the read-only memory mapped section. This routine will call the WinStationGetSessionIds API and enumerate through each ID, calling the CreateClient routine. Logically, this is so that each active user session can get the notification.

Finally, what happens in CreateClient is pretty straightforward: the WTSQueryUserToken and ImpersonateLoggedOnUser APIs are used to get an impersonation logon token corresponding to the user on the session ID given, and a command line for UI0Detect is built, which contains the handle of the read-only memory mapped section. Ultimately, CreateProcessAsUser is called, resulting in the UI0Detect process being created on that Session ID, running with the user’s credentials.

What happens next will depend on user interaction with the client, as the service will continue to behave according to the rules we’ve already described, detecting new windows as they’re being created, and detection destroyed windows as well, and waiting for a notification that someone has logged into session 0.

We’ll follow up on the client-mode behavior on Part 3, where we’ll also look at the UI0_SHARED_INFO structure and an access validation flaw which will allow us to spoof the client dialog.