The era of 64-bit computing is finally upon the consumer market, and what was once a rare hardware architecture has become the latest commodity in today’s processors. 64-bit processors promise not only a larger number of registers and internal optimizations, but, perhaps most importantly, access to a full 64-bit address space, raising the maximum amount of addressable memory from 32 bits to 64 bits, or from 4GB to 16EB (exabytes, about 17 billion GB). Although previous solutions such as PAE enlarged the physically addressable limit to 36 bits, they were architectural “patches” and not real solutions for increasing the memory available to hungry workloads or applications.

Although 16EB is a copious amount of memory, today’s computers, as well as tomorrow’s foreseeable machines (at least in the consumer market), are not yet close to requiring support for that much memory. For this reason, as well as to simplify current chip architecture, the AMD64 specification (which Intel used for its own implementation of x64 processors, but not Itanium) currently supports only 48 bits of virtual address space. The remaining 16 bits must all be set to the same value as the most significant “valid” or “implemented” bit (bit 47), resulting in canonical addresses: the bottom half of the address space starts at 0x0000000000000000, with only the low 12 hex digits being part of an actual address (so it ends at 0x00007FFFFFFFFFFF), while the top half starts at 0xFFFF800000000000 and ends at 0xFFFFFFFFFFFFFFFF.
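To make the canonical-address rule concrete, here is a minimal sketch in C of a check for the 48-bit case described above. The function name `is_canonical` and the `IMPLEMENTED_BITS` constant are my own illustrative choices, not anything taken from the AMD64 specification or from Windows.

```c
#include <stdbool.h>
#include <stdint.h>

/* Number of implemented virtual-address bits (48 on the CPUs discussed here). */
#define IMPLEMENTED_BITS 48

/* An address is canonical when bits 63..47 are all zeros or all ones, i.e.
 * the unimplemented bits are a copy of the top implemented bit (bit 47). */
static bool is_canonical(uint64_t addr)
{
    uint64_t upper = addr >> (IMPLEMENTED_BITS - 1);   /* 17 bits: 63..47 */
    return upper == 0 || upper == 0x1FFFF;
}
```

With this check, both ends of the two halves quoted above pass, while the first address past the bottom half (0x0000800000000000) is rejected.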

As newer processors implement more of the addressing bits, the lower half of memory will expand upward, toward 0x7FFFFFFFFFFFFFFF, while the upper half will expand downward, toward 0x8000000000000000 (a split similar to today’s 32-bit memory space, but with 32 more bits). Anyone working with 64-bit code ought to be very familiar with this implementation, since it can have subtle effects on code when the number of implemented bits grows. Even in the 32-bit world, a number of Windows applications (including system code in Windows itself) assume the most significant bit of a pointer is zero and use it as a flag. With the flag set, the value would look like a kernel-mode address, so the application masks the bit off before using it as an address. Now developers get a shot at 16 bits to abuse as flags, sequence numbers and other optimizations that the CPU won’t even know about (on current x64 processors), on top of the usual bits that can be assumed due to alignment or user vs kernel-mode code location. Compiling the 64-bit application for Itanium and testing it would reveal such bugs, but this is beyond the testing capabilities of most developers.
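Here is a rough sketch in C of the kind of trick described above: a 16-bit value stashed in the unimplemented upper bits of a pointer. The helper names (`tag_pointer`, `pointer_tag`, `pointer_address`) are hypothetical, and addresses are handled as plain 64-bit integers to keep the example portable. It assumes exactly 48 implemented bits, which is precisely the assumption that breaks on a CPU implementing more.

```c
#include <stdint.h>

#define IMPLEMENTED_BITS 48
#define ADDR_MASK ((1ULL << IMPLEMENTED_BITS) - 1)   /* low 48 bits */

/* Store a 16-bit tag in bits 48..63, overwriting the sign-extension bits. */
static uint64_t tag_pointer(uint64_t addr, uint16_t tag)
{
    return (addr & ADDR_MASK) | ((uint64_t)tag << IMPLEMENTED_BITS);
}

/* Recover the tag from bits 48..63. */
static uint16_t pointer_tag(uint64_t tagged)
{
    return (uint16_t)(tagged >> IMPLEMENTED_BITS);
}

/* Strip the tag and restore the canonical form by sign-extending bit 47. */
static uint64_t pointer_address(uint64_t tagged)
{
    uint64_t addr = tagged & ADDR_MASK;
    if (addr & (1ULL << (IMPLEMENTED_BITS - 1)))
        addr |= ~ADDR_MASK;
    return addr;
}
```

A 48-bit canonical address survives the round trip, but an address that uses bit 48 or higher (valid once more bits are implemented) silently comes back as something else, with no fault at the point of corruption.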

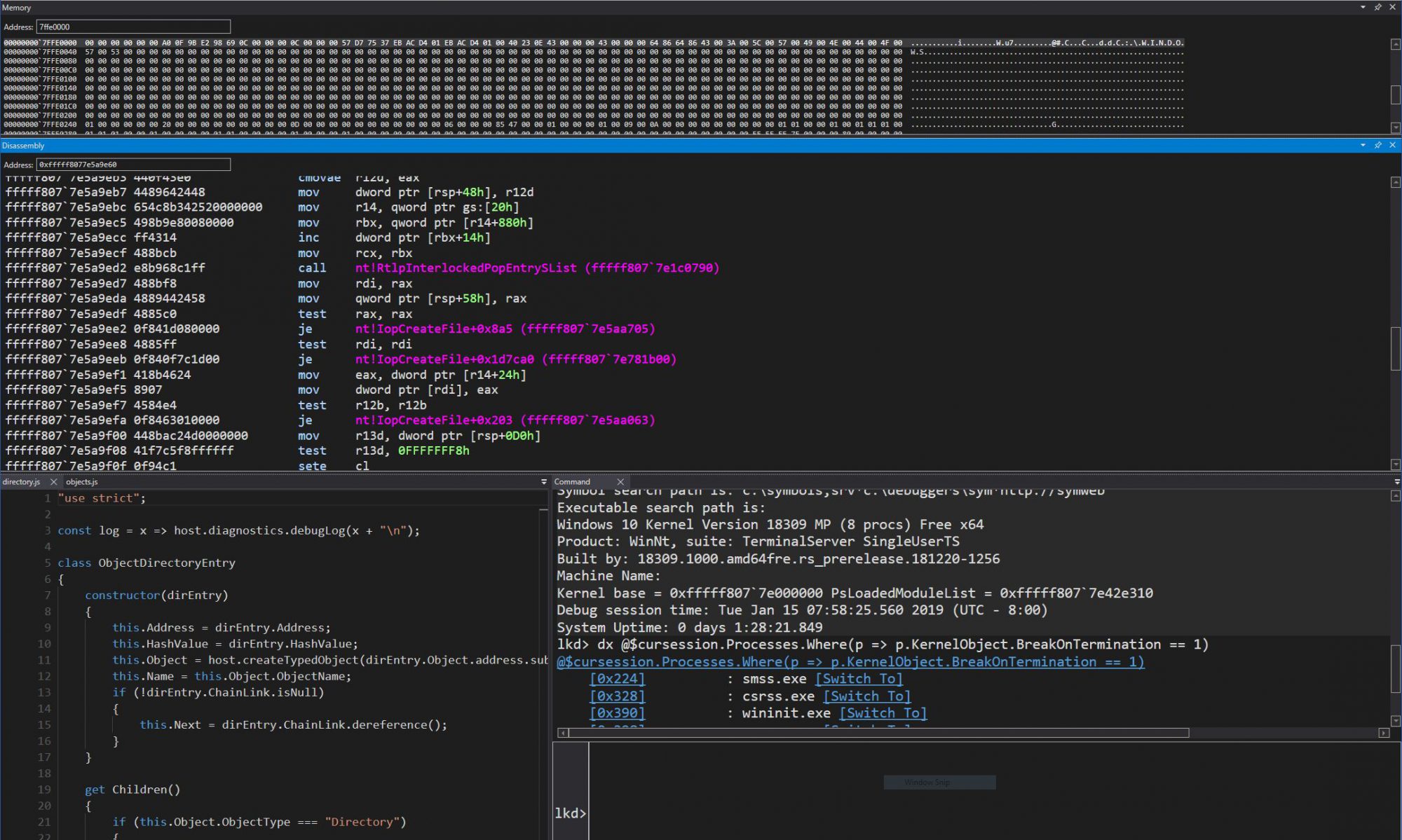

Examples within Microsoft’s Windows are prevalent: pushlocks, fast references, Patchguard DPC contexts, and singly-linked lists are only some of the common Windows mechanisms which use bits within a pointer for non-addressing purposes. It is the last of these which is of interest to us, because of the memory addressing limit it imposed on Windows x64: the implementation required a CPU instruction that the initial x64 processors lacked. First, let’s have a look at the data structure and functionality on 32-bit systems. If you’re unsure of what exactly a singly-linked list is, I suggest a quick read in an algorithms book or on Google.

Here is the SLIST_HEADER, the data structure Windows uses to represent the head of the list:

    typedef union _SLIST_HEADER {
        ULONGLONG Alignment;
        struct {
            SLIST_ENTRY Next;
            USHORT Depth;
            USHORT Sequence;
        } DUMMYSTRUCTNAME;
    } SLIST_HEADER, *PSLIST_HEADER;

Here we have an 8-byte structure, guaranteed to be aligned as such, composed of three elements: the pointer to the next entry (32-bits, or 4 bytes), and depth and sequence numbers, each 16-bits (or 2 bytes). Striving to create lock-free push and pop operations, the developers realized that they could make use of an instruction present on Pentium processors or higher — CMPXCHG8B (Compare and Exchange 8 bytes). This instruction allows the atomic modification of 8 bytes of data, which typically, on a 486, would’ve required two operations (and thus subjected these operations to race conditions requiring a spinlock). By using this native CPU instruction, which also supports the LOCK prefix (guaranteeing atomicity on a multi-processor system), the need for a spinlock is eliminated, and all operations on the list become lock-free (increasing speed).
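To illustrate the idea (this is not the actual Windows code), here is a minimal sketch of lock-free push and pop built on a single 8-byte compare-and-exchange, written with C11 atomics. So that it runs on any host, the 32-bit “pointer” is modelled as an index into a small array. `pool`, `entry_t`, `NIL`, `list_push`, `list_pop` and `list_depth` are all hypothetical names, and bumping the sequence number on every update is a choice made for this sketch, not a claim about what Windows does.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdint.h>

#define NIL       0xFFFFFFFFu   /* hypothetical "no entry" marker           */
#define POOL_SIZE 16u           /* hypothetical number of available entries */

typedef struct {
    uint32_t next;              /* index of the next entry, or NIL */
    int      value;
} entry_t;

static entry_t pool[POOL_SIZE];

/* One 8-byte word: next (bits 0-31), depth (bits 32-47), sequence (bits 48-63). */
static _Atomic uint64_t list_header = NIL;

static uint64_t pack_header(uint32_t next, uint16_t depth, uint16_t sequence)
{
    return (uint64_t)next | ((uint64_t)depth << 32) | ((uint64_t)sequence << 48);
}

static uint16_t list_depth(void)
{
    return (uint16_t)(atomic_load(&list_header) >> 32);
}

/* Push entry `idx`: link it to the current head, then publish next, depth
 * and sequence together with a single 8-byte compare-and-exchange. */
static void list_push(uint32_t idx)
{
    assert(idx < POOL_SIZE);
    uint64_t old = atomic_load(&list_header);
    uint64_t desired;
    do {
        pool[idx].next = (uint32_t)old;
        desired = pack_header(idx,
                              (uint16_t)((old >> 32) + 1),
                              (uint16_t)((old >> 48) + 1));
        /* On failure, `old` is refreshed with the current header; retry. */
    } while (!atomic_compare_exchange_weak(&list_header, &old, desired));
}

/* Pop the first entry and return its index, or NIL if the list is empty. */
static uint32_t list_pop(void)
{
    uint64_t old = atomic_load(&list_header);
    uint64_t desired;
    do {
        uint32_t first = (uint32_t)old;
        if (first == NIL)
            return NIL;
        desired = pack_header(pool[first].next,
                              (uint16_t)((old >> 32) - 1),
                              (uint16_t)((old >> 48) + 1));
    } while (!atomic_compare_exchange_weak(&list_header, &old, desired));
    return (uint32_t)old;
}
```

The changing sequence number is what lets the compare-and-exchange notice that the header was modified in between, even if the same entry has ended up back at the head of the list.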

On 64-bit computers, addresses are 64 bits wide, so the pointer to the next entry must be 64 bits as well. If we keep the depth and sequence numbers within the same parameters, we need a way to atomically modify at minimum 64+32 bits of data, or better yet, 128. Unfortunately, the first x64 processors did not implement the essential CMPXCHG16B instruction to allow this. The developers had to find a variety of clever ways to squeeze as much information as possible into only 64 bits, which was the most they could modify atomically at once. The 64-bit SLIST_HEADER was born:

    struct { // 8-byte header
        ULONGLONG Depth:16;
        ULONGLONG Sequence:9;
        ULONGLONG NextEntry:39;
    } Header8;

The first sacrifice was to reduce the space for the sequence number from 16 bits to 9, reducing the maximum sequence number the list could reach. This still left only 39 bits for the pointer, a mediocre improvement over 32 bits. By forcing the structure to be 16-byte aligned when allocated, 4 more bits could be won, since the bottom 4 bits of the address could now always be assumed to be 0. This gives us 43 bits for addresses, but we can still do better. Because a given list is used *either* in kernel-mode or in user-mode, and cannot be used across address spaces, the top bit can be ignored, just as on 32-bit machines: the code will assume the address to be kernel-mode if called in kernel-mode, and vice-versa. This allows us to address up to 44 bits of memory through the NextEntry pointer, and is the defining constraint of Windows’ addressing limit.
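The packing just described can be sketched in C as follows. This is an illustration under my own assumptions, not the Windows implementation: `encode_next`, `decode_next` and the `kernel_mode` flag are hypothetical, and the sketch assumes that every address bit above the 43 stored ones is 0 in user-mode and 1 in kernel-mode.

```c
#include <stdbool.h>
#include <stdint.h>

#define NEXT_BITS   39   /* width of the NextEntry field                  */
#define ALIGN_SHIFT 4    /* 16-byte alignment: the low 4 bits are zero    */
#define NEXT_MASK   ((1ULL << NEXT_BITS) - 1)
/* Bits 43..63: the part of the address that is not stored in the header. */
#define UPPER_BITS  (~((1ULL << (NEXT_BITS + ALIGN_SHIFT)) - 1))

/* Drop the 4 alignment bits and keep the next 39 bits of the address. */
static uint64_t encode_next(uint64_t addr)
{
    return (addr >> ALIGN_SHIFT) & NEXT_MASK;
}

/* Rebuild the address; the caller's mode supplies the missing upper bits. */
static uint64_t decode_next(uint64_t next, bool kernel_mode)
{
    uint64_t addr = next << ALIGN_SHIFT;
    return kernel_mode ? (addr | UPPER_BITS) : addr;
}
```

Each mode can therefore reach 2^43 bytes (8TB), for 2^44 bytes (16TB) in total. An entry located past that range loses its upper bits on encoding and decodes to a different address.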

44 bits is nothing to laugh at: it allows 16TB of memory to be described, and thus splits Windows into two roughly even chunks of 8TB for user-mode and kernel-mode memory. Nevertheless, this is still 16 times smaller than the CPU’s own limit (48 bits is 256TB), and even farther still from the maximum 64 bits. So, with scalability in mind, there do exist some other bits in the SLIST_HEADER which define the type of header that is being dealt with, because yes, there is an official 16-byte header, written for the day when x64 CPUs would support 128-bit Compare and Exchange (which they now do). First, a look at the full 8-byte header:

    struct { // 8-byte header
        ULONGLONG Depth:16;
        ULONGLONG Sequence:9;
        ULONGLONG NextEntry:39;
        ULONGLONG HeaderType:1; // 0: 8-byte; 1: 16-byte
        ULONGLONG Init:1;       // 0: uninitialized; 1: initialized
        ULONGLONG Reserved:59;
        ULONGLONG Region:3;
    } Header8;

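Notice how the “HeaderType” bit sits at the start of the second 64-bit word, right after the 64 bits taken by Depth, Sequence and NextEntry (16 + 9 + 39). The 16-byte layout keeps it at exactly that position, which allows the implementation and developers to tell the two formats apart and deal with 16-byte headers whenever they are put into use. This is what they look like: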

    struct { // 16-byte header
        ULONGLONG Depth:16;
        ULONGLONG Sequence:48;
        ULONGLONG HeaderType:1; // 0: 8-byte; 1: 16-byte
        ULONGLONG Init:1;       // 0: uninitialized; 1: initialized
        ULONGLONG Reserved:2;
        ULONGLONG NextEntry:60; // last 4 bits are always 0’s
    } Header16;

Note how the NextEntry pointer has now grown to 60 bits. Because the structure is still 16-byte aligned, the 4 low bits are known to be zero, so the full 64 bits become addressable. As for supporting both these headers and giving the full address space to compatible CPUs, one could probably expect Windows to use a runtime “hack” similar to the “486 Compatibility Spinlock” used in the old days of NT, when CMPXCHG8B couldn’t always be assumed to be present (although Intel implemented it on the 586 Pentium, Cyrix did not). As of now, I’m not aware of this 16-byte header being used.
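As a sanity check on the two layouts, here is a small C sketch that overlays them in a union. The type name `slist_header_sketch_t`, the `Raw` view and the two helpers are hypothetical additions for illustration. Bit-field layout is implementation-defined, so this assumes a compiler that accepts 64-bit bit-fields and allocates them from the least significant bit upward, which is what the common x64 compilers do.

```c
#include <stdint.h>

typedef union {
    struct { uint64_t Low; uint64_t High; } Raw;   /* hypothetical raw view */
    struct {                                       /* 8-byte header  */
        uint64_t Depth:16;
        uint64_t Sequence:9;
        uint64_t NextEntry:39;
        uint64_t HeaderType:1;
        uint64_t Init:1;
        uint64_t Reserved:59;
        uint64_t Region:3;
    } Header8;
    struct {                                       /* 16-byte header */
        uint64_t Depth:16;
        uint64_t Sequence:48;
        uint64_t HeaderType:1;
        uint64_t Init:1;
        uint64_t Reserved:2;
        uint64_t NextEntry:60;
    } Header16;
} slist_header_sketch_t;

_Static_assert(sizeof(slist_header_sketch_t) == 16, "both layouts span 16 bytes");

/* Store a 16-byte-aligned address in the 16-byte layout. */
static void set_next16(slist_header_sketch_t *h, uint64_t addr)
{
    h->Header16.HeaderType = 1;
    h->Header16.Init = 1;
    h->Header16.NextEntry = addr >> 4;   /* the 4 low bits are always zero */
}

/* Rebuild the full 64-bit address from the 60 stored bits. */
static uint64_t get_next16(const slist_header_sketch_t *h)
{
    return (uint64_t)h->Header16.NextEntry << 4;
}
```

Under those layout assumptions, HeaderType reads back as 1 through either view, and the second 64-bit word is simply the entry’s address with the two flag bits tucked into its low nibble.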

So there you have it: an important lesson not only on Windows 64-bit programming, but also on the importance of thinking ahead when making potentially non-scalable design decisions. Windows did a good job at maintaining capability while still allowing expansion, but the consequences of using the non-implemented bits of current CPUs as secondary data may be hard to detect once your software moves to platforms that implement them. Tread carefully.